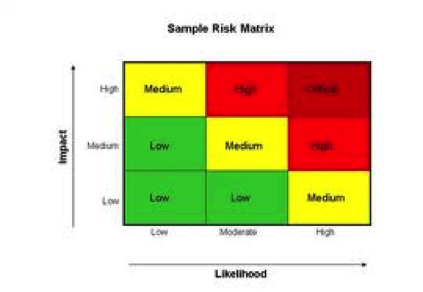

This is a new paper, co-authored with my colleague John Watt and published in the journal Risk Analysis (2013, 33(13): 2068-2078). It examines that widely used tool, the risk-consequence matrix, often simply called a risk matrix. These devices are everywhere, and hundreds of versions are in circulation. They vary mainly in the number of cells (commonly anywhere from 2×2 to 10×10) and in the colour scheme used to denote the different combinations of likelihood and consequence. Proponents of these devices believe, amongst other things, that they help users prioritise their activities.

We do not share this view. Along with a growing number of authors, we find these matrices misleading: verging on useless, or even worse than useless. As the abstract says:

“Risk matrices are commonly-encountered devices for rating hazards in numerous areas of risk management. Part of their popularity is predicated on their apparent simplicity and transparency. Recent research, however, has identified serious mathematical defects and inconsistencies. This article further examines the reliability and utility of risk matrices for ranking hazards, specifically in the context of public leisure activities including travel. We find that (a) different risk assessors may assign vastly different ratings to the same hazard, (b) that even following lengthy reflection and learning scatter remains high, (c) the underlying drivers of disparate ratings relate to fundamentally different worldviews, beliefs and a panoply of psycho-social factors which are seldom explicitly acknowledged. It appears that risk matrices when used in this context may be creating no more than an artificial and even untrustworthy picture of the relative importance of hazards which may be of little or no benefit to those trying to manage risk effectively and rationally.”
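For readers unfamiliar with the mechanics, the following is a minimal sketch of how a typical matrix turns two judgements into a colour. The 5×5 size, the likelihood × consequence product and the band thresholds are all assumptions chosen purely for illustration; real matrices differ in all of these, and the paper does not prescribe any of them. The three "assessors" and their scores are hypothetical, not data from the study. They simply show how the kind of disagreement described in finding (a) lands the same hazard in different cells and colour bands.

```python
from typing import Literal

# Hypothetical colour bands for an illustrative 5x5 matrix.
Band = Literal["green", "amber", "red"]


def rate(likelihood: int, consequence: int) -> Band:
    """Map a (likelihood, consequence) pair, each scored 1-5, to a colour band.

    Assumption: score = likelihood * consequence, with illustrative
    thresholds. Many real matrices use other scoring rules and cut-offs.
    """
    if not (1 <= likelihood <= 5 and 1 <= consequence <= 5):
        raise ValueError("scores must be integers from 1 to 5")
    score = likelihood * consequence
    if score >= 15:
        return "red"
    if score >= 6:
        return "amber"
    return "green"


# Hypothetical scores given by three assessors to the SAME hazard.
assessments = {"A": (2, 2), "B": (3, 3), "C": (4, 4)}

for name, (lik, con) in assessments.items():
    print(name, lik, con, rate(lik, con))
# A 2 2 green
# B 3 3 amber
# C 4 4 red
```

Each assessor differs from the next by only one step on each axis, yet the hazard is rated green, amber or red depending on who scored it. Nothing in the matrix itself reveals which rating, if any, is right.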